Introduction

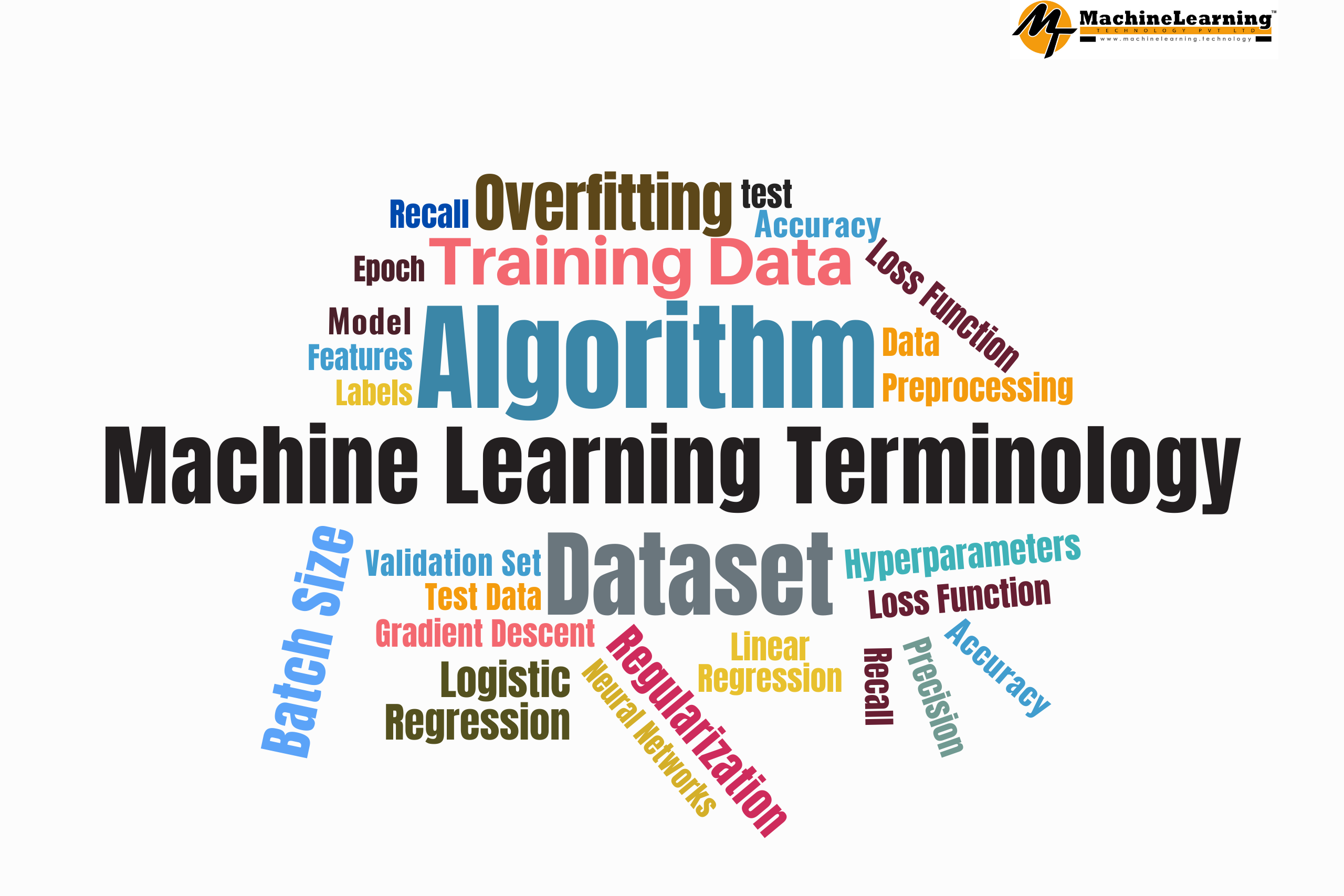

Machine learning (ML) can seem like a confusing maze of jargon when you’re just starting out. Terms like “training data,” “overfitting,” and “hyperparameters” can feel overwhelming, even for students or researchers venturing into the field of artificial intelligence.

Understanding the terminology is the first step to mastering machine learning and feeling confident while reading papers, building models, or discussing AI with peers. In this blog post, we’ll break down the most common machine learning terms in plain English to help you navigate the world of ML like a pro.

Let’s get started and decode the language of machine learning together!

What is Machine Learning Terminology?

Machine learning terminology refers to the set of specialized terms and concepts used to describe various components, processes, and techniques within the field of machine learning. Just like every subject has its own vocabulary—physics has “force” and “momentum,” while mathematics has “derivatives” and “integrals”—machine learning has its own unique lexicon.

Grasping these terms is crucial for anyone working in or studying ML because they provide a common language for discussing how machine learning systems are built, trained, and evaluated.

For example:

- When someone says “train the model,” they’re referring to the process of teaching an algorithm using data.

- When discussing “features,” they’re talking about the input variables that influence predictions.

Understanding this terminology not only makes it easier to learn the subject but also allows you to communicate effectively in professional and academic environments.

In the following sections, we’ll break down these key terms in a simple, approachable way.

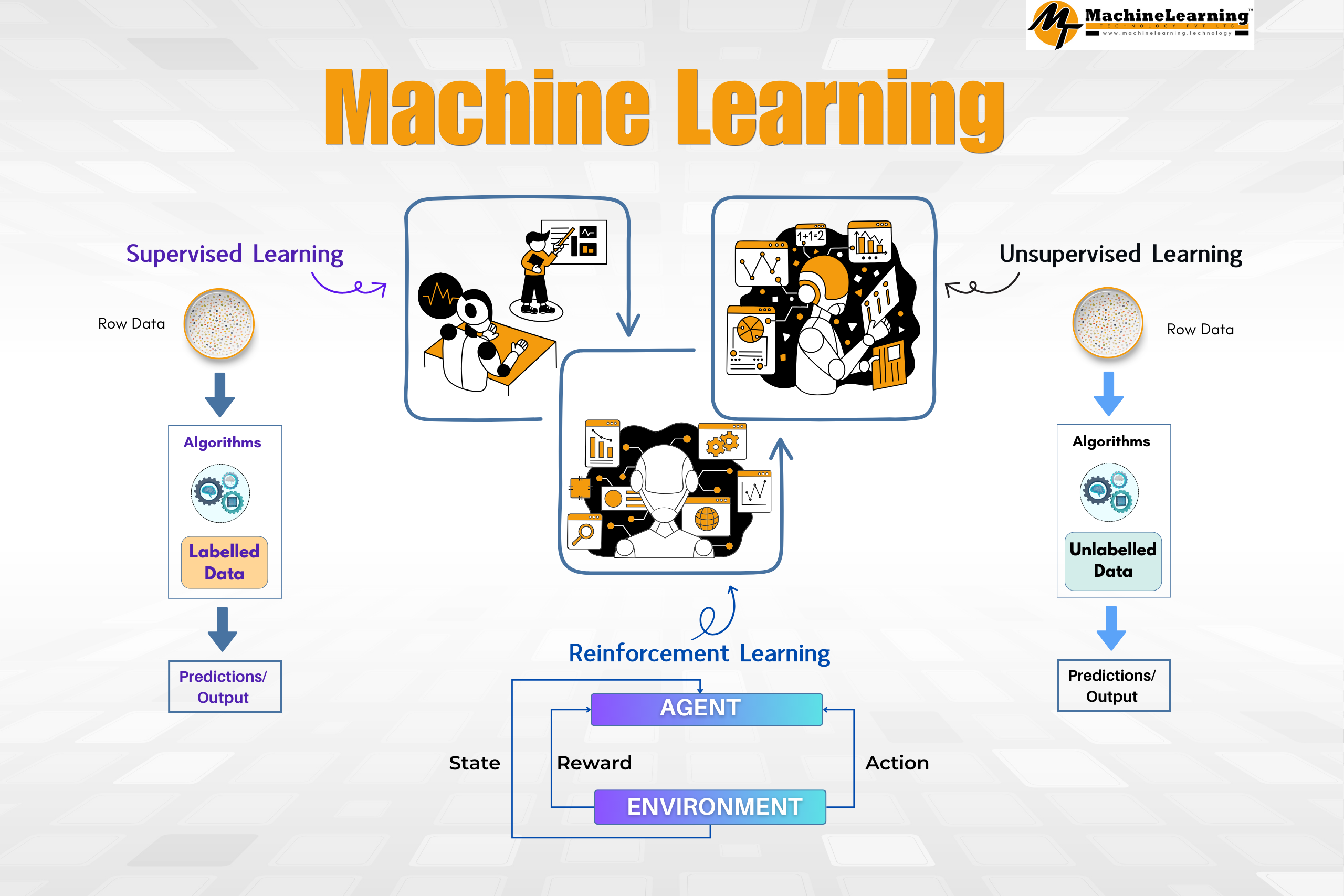

1. Algorithm

An algorithm in machine learning refers to the set of rules or instructions the computer uses to learn from data. Think of it as a recipe that tells the machine how to find patterns and make predictions.

- Example: Linear regression, decision trees, and neural networks are all popular ML algorithms.

- Why It Matters: The choice of algorithm determines how the machine will process data and solve problems.

2. Model

A model is the output of a machine learning algorithm after it has been trained on data. It’s essentially the brain of your ML system that can make predictions or decisions based on what it has learned.

- Example: A model trained to recognize cats in photos can identify a cat in new images.

- Why It Matters: Models are what we deploy to make real-world applications work, such as chatbots or recommendation systems.
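To make the difference between an algorithm and a model concrete, here is a minimal sketch. It assumes the simplest possible case: the algorithm is ordinary least squares for a straight line, and the model it produces is nothing more than a slope and an intercept. The function names `fit_line` and `predict` and the training data are hypothetical, chosen only for illustration.

```python
# Algorithm: least-squares fitting of y = slope * x + intercept.
# Model: the (slope, intercept) pair the algorithm returns.

def fit_line(xs, ys):
    """Learning algorithm: find the line that minimizes squared error on (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept  # the trained model is just these two numbers


def predict(model, x):
    """Use the trained model to make a prediction for a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data that follows y = 3x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 4.0, 7.0, 10.0]

model = fit_line(xs, ys)
print(model)                 # (3.0, 1.0)
print(predict(model, 10.0))  # 31.0
```

The same algorithm run on different data would produce a different model; the model is what you save and deploy.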

3. Training Data

Training data is the dataset used to teach a machine learning algorithm. It contains examples, often paired with labels, that the model uses to learn patterns and relationships.

- Example: A dataset of emails labeled as “spam” or “not spam” trains a spam filter.

- Why It Matters: The quality and quantity of training data play a huge role in the model’s performance.

4. Features

Features are the input variables or attributes used to make predictions. These could be numbers, categories, or even images that describe the data.

- Example: If you’re predicting house prices, features could include the number of bedrooms, square footage, and location.

- Why It Matters: Choosing informative features (feature selection) and creating or transforming them into useful forms (feature engineering) directly impacts the accuracy of your model.
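Most algorithms expect numbers, so raw records have to be turned into numeric feature vectors first. The sketch below assumes a house record stored as a Python dict with hypothetical keys `bedrooms`, `sqft`, and `location`, and a hypothetical list of known locations. It shows two common feature-engineering steps: deriving a new feature (square footage per bedroom) and one-hot encoding a categorical field.

```python
# Hypothetical set of locations that appear in the training data
LOCATIONS = ["downtown", "suburb", "rural"]


def to_features(house):
    """Turn a raw house record (a dict) into a numeric feature vector."""
    bedrooms = float(house["bedrooms"])
    sqft = float(house["sqft"])
    # Derived feature: living space per bedroom (guard against zero bedrooms)
    sqft_per_bedroom = sqft / max(bedrooms, 1.0)
    # One-hot encoding: one 0/1 slot per known location
    one_hot = [1.0 if house["location"] == loc else 0.0 for loc in LOCATIONS]
    return [bedrooms, sqft, sqft_per_bedroom] + one_hot


house = {"bedrooms": 3, "sqft": 1500, "location": "suburb"}
print(to_features(house))  # [3.0, 1500.0, 500.0, 0.0, 1.0, 0.0]
```

One-hot encoding avoids implying an order between categories, which a single integer code (downtown = 0, suburb = 1, ...) would suggest.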

5. Labels

Labels are the outputs or answers in a supervised learning dataset. They are the values the model is trying to predict or classify.

- Example: For email spam detection, the labels would be “spam” or “not spam.”

- Why It Matters: Supervised learning depends on labels; without them, the model has no correct answers to learn from, and you would need unsupervised techniques instead.
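Putting training data, features, and labels together, a supervised dataset is a collection of (features, label) pairs. This sketch uses a tiny, made-up spam dataset with two hypothetical features (`num_links` and `free_count`, the number of times the word "free" appears) and converts it into the feature matrix `X` and label list `y` that most training code expects.

```python
# Hypothetical labeled training data for a spam filter:
# each example pairs a dict of features with a text label.
training_data = [
    ({"num_links": 7, "free_count": 3}, "spam"),
    ({"num_links": 0, "free_count": 0}, "not spam"),
    ({"num_links": 5, "free_count": 2}, "spam"),
    ({"num_links": 1, "free_count": 0}, "not spam"),
]

# Encode text labels as integers so an algorithm can work with them
LABEL_TO_INT = {"not spam": 0, "spam": 1}

X = [[ex["num_links"], ex["free_count"]] for ex, _ in training_data]  # features
y = [LABEL_TO_INT[label] for _, label in training_data]              # labels

print(X)  # [[7, 3], [0, 0], [5, 2], [1, 0]]
print(y)  # [1, 0, 1, 0]
```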

6. Overfitting

Overfitting occurs when a model learns the training data too well—even its noise and irrelevant details. As a result, it performs poorly on new, unseen data.

- Example: A model that memorizes specific data points instead of understanding patterns will overfit.

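- Why It Matters: An overfit model scores well on the training data but generalizes poorly, so it cannot be trusted on real-world inputs. Regularization, more training data, and early stopping can reduce overfitting, while cross-validation helps you detect it.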
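Here is a small, deliberately extreme illustration of memorizing versus learning the pattern, built on made-up data. The underlying relationship is y = 2x, but the training labels carry a little noise. One model is an interpolating polynomial that passes exactly through every training point (zero training error); the other is a plain least-squares line. Both are evaluated on two unseen points. The data, noise values, and function names are assumptions chosen for the demo.

```python
def fit_interpolator(train_xs, train_ys):
    """Extreme overfitting: a polynomial that passes exactly through every training point."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(train_xs, train_ys)):
            basis = 1.0  # Lagrange basis: 1 at xi, 0 at every other training x
            for j, xj in enumerate(train_xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict


def fit_line(train_xs, train_ys):
    """Simpler model: least-squares straight line."""
    n = len(train_xs)
    mx, my = sum(train_xs) / n, sum(train_ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(train_xs, train_ys)) / sum(
        (x - mx) ** 2 for x in train_xs
    )
    return lambda x: my + slope * (x - mx)


def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Underlying relationship y = 2x; training labels carry +/-0.5 noise
train_xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
train_ys = [2 * x + e for x, e in zip(train_xs, noise)]
# Unseen points from the same relationship (noise-free for simplicity)
test_xs = [6.0, 7.0]
test_ys = [2 * x for x in test_xs]

results = {}
for name, fit in [("interpolating polynomial", fit_interpolator), ("straight line", fit_line)]:
    model = fit(train_xs, train_ys)
    results[name] = (mse(model, train_xs, train_ys), mse(model, test_xs, test_ys))
    print(f"{name}: train MSE = {results[name][0]:.3f}, test MSE = {results[name][1]:.3f}")
```

The interpolating polynomial has zero training error because it has fit the noise, and its predictions on the unseen points are wildly off. The straight line makes small errors everywhere but stays close on new data.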

7. Underfitting

Underfitting happens when a model is too simple to capture the underlying patterns in the data. This leads to poor performance on both training and test data.

- Example: A linear regression model used to capture a highly complex, nonlinear relationship will underfit.

- Why It Matters: An underfitted model fails to deliver meaningful predictions.
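The sketch below shows underfitting on made-up data where y = x². A straight line in x cannot bend, so least squares settles on a flat line and the error stays high even on the training data. Giving the same line-fitting algorithm a better input feature (x² instead of x) removes the underfitting. The helper names are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return slope, my - slope * mx


def train_mse(xs, ys, slope, intercept):
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [x ** 2 for x in xs]  # a clearly nonlinear relationship

# Underfit: a straight line in x is too simple for a parabola
s, b = fit_line(xs, ys)
print(s, b, train_mse(xs, ys, s, b))  # 0.0 4.0 12.0

# Fix: same algorithm, but fed the feature x**2
zs = [x ** 2 for x in xs]
s2, b2 = fit_line(zs, ys)
print(s2, b2, train_mse(zs, ys, s2, b2))  # 1.0 0.0 0.0
```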

8. Hyperparameters

Hyperparameters are the settings or configurations you specify before training a machine learning model. They influence how the algorithm learns from data but are not learned by the model itself.

- Example: Learning rate, the number of layers in a neural network, or the depth of a decision tree.

- Why It Matters: Tuning hyperparameters can significantly improve a model’s performance.
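To see the difference between a hyperparameter and a learned parameter, consider this sketch. It runs gradient descent on a toy loss, f(w) = (w - 3)², whose minimum is at w = 3. The weight `w` is learned; the learning rate and number of steps are hyperparameters fixed before training starts. The toy loss and the `minimize` helper are assumptions for illustration only.

```python
def minimize(learning_rate, steps=100, start=0.0):
    """Gradient descent on f(w) = (w - 3)**2.

    learning_rate and steps are hyperparameters (chosen by us);
    w is the parameter the procedure learns.
    """
    w = start
    for _ in range(steps):
        grad = 2 * (w - 3)         # derivative of (w - 3)**2
        w -= learning_rate * grad  # step against the gradient
    return w


print(minimize(0.1))  # converges very close to 3.0
print(minimize(1.1))  # learning rate too large: the updates overshoot and diverge
```

With a learning rate of 0.1, the distance to the minimum shrinks by a factor of 0.8 each step; with 1.1, it grows by a factor of 1.2 each step, which is why tuning this one setting matters so much.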

9. Loss Function

A loss function measures how far off a model’s predictions are from the actual values. It’s the “error” the algorithm tries to minimize during training.

- Example: Mean Squared Error (MSE) is a common loss function for regression tasks.

- Why It Matters: The choice of loss function impacts how the model is trained and its final accuracy.
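As a quick illustration, here are two common regression losses written out by hand: mean squared error (MSE) and mean absolute error (MAE). The sample values are made up.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences; large errors are penalized heavily."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average of absolute differences; every unit of error counts the same."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 7.0]

print(mean_squared_error(y_true, y_pred))   # 1.25
print(mean_absolute_error(y_true, y_pred))  # 0.75
```

In the MSE, the single prediction that is off by 2 contributes 4 of the total 5, which is why models trained with MSE work hard to avoid large individual errors.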

10. Gradient Descent

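Gradient descent is an optimization algorithm used to minimize the loss function and improve the model’s predictions. At each step, it computes the gradient of the loss with respect to the model’s parameters (like weights) and moves the parameters a small amount in the opposite direction, so the error shrinks gradually.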

- Example: Gradient descent helps a neural network learn by tweaking weights after each iteration.

- Why It Matters: It’s the backbone of many machine learning algorithms, especially deep learning.
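Here is a minimal sketch of gradient descent fitting a line y = w·x + b by minimizing the MSE loss. The data follows y = 2x + 1 exactly, and the learning rate (0.05) and epoch count (1000) are assumed values that happen to work for this small dataset.

```python
def gradient_descent(xs, ys, learning_rate=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors under the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error**2) with respect to w and b
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Move each parameter a small step against its gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # true relationship: y = 2x + 1

w, b = gradient_descent(xs, ys)
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

Neural networks use the same idea, just with many more parameters and with gradients computed automatically by backpropagation.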

11. Validation Set

A validation set is a subset of data held out from training and used during development to evaluate the model and fine-tune it, for example by choosing hyperparameters or deciding when to stop training.

- Example: If you have 1,000 data points, you might use 800 for training, 100 for validation, and 100 for testing.

- Why It Matters: Because the model is not trained on it, the validation set reveals overfitting early and lets you compare different settings without touching the test set.
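The 800/100/100 split from the example above can be sketched in a few lines. This version shuffles first so each subset is representative, and uses a fixed seed (42, an arbitrary choice) so the split is reproducible. The `train_val_test_split` helper is hypothetical; libraries such as scikit-learn provide their own splitting utilities.

```python
import random


def train_val_test_split(data, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle a copy of the data, then cut it into train/validation/test parts."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains (here 10%)
    return train, val, test


data = list(range(1000))  # stand-in for 1,000 data points
train, val, test = train_val_test_split(data)
print(len(train), len(val), len(test))  # 800 100 100
```

A typical workflow trains on `train`, compares candidate models or hyperparameters on `val`, and evaluates only the final choice on `test`.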

12. Test Data

Test data is the final dataset used to evaluate a trained model. It’s separate from the training and validation sets to ensure an unbiased evaluation of the model’s performance.

- Example: Once your model is trained and validated, you test it on new data to check how well it generalizes.

- Why It Matters: Because the model never sees it during training or tuning, test data gives an unbiased estimate of how well your model is likely to perform in real-world scenarios.

13. Accuracy, Precision, and Recall

These are performance metrics used to evaluate a model:

- Accuracy: The percentage of correct predictions.

- Precision: Out of all predicted positives, how many are actually positive.

- Recall: Out of all actual positives, how many were correctly predicted.

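- Why It Matters: Depending on the task, you may prioritize precision (e.g., in spam detection, where sending a legitimate email to the spam folder is costly) or recall (e.g., in medical diagnostics, where missing a real case is costly).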
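The three metrics can be computed directly from counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). The sketch below assumes binary labels where 1 is the positive class; the example predictions are made up.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN, TN for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    return tp, fp, fn, tn


def metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)                   # share of all predictions that are right
    precision = tp / (tp + fp) if tp + fp else 0.0       # of predicted positives, how many are real
    recall = tp / (tp + fn) if tp + fn else 0.0          # of real positives, how many were found
    return accuracy, precision, recall


y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 0, 0, 1]

acc, prec, rec = metrics(y_true, y_pred)
print(f"{acc:.3f} {prec:.3f} {rec:.3f}")  # 0.625 0.667 0.500
```

Here the model finds only half of the real positives (recall 0.5) even though 62.5% of its predictions are correct overall, which is why accuracy alone can be misleading.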

14. Neural Networks

A neural network is a type of machine learning model inspired by the human brain. It consists of layers of nodes (neurons) that process data and extract patterns.

- Example: Neural networks power technologies like facial recognition and language translation.

- Why It Matters: They are key to solving complex problems in image recognition, natural language processing, and more.
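To show what "layers of nodes" means in practice, here is a forward pass through a tiny network with two inputs, two hidden neurons using the ReLU activation, and one output. The weights are hand-picked (not trained) so that the network computes XOR, a function no single straight-line model can represent. In a real network these weights would be learned with gradient descent; the specific values and helper names here are assumptions for illustration.

```python
def relu(values):
    """ReLU activation: negative values become 0."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: each neuron computes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


# Hand-picked weights (hypothetical, not learned) that make the network compute XOR
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x):
    hidden = relu(dense(x, HIDDEN_W, HIDDEN_B))    # hidden layer
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]    # output layer (single neuron)


for x in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(x, forward(x))  # outputs 0.0, 1.0, 1.0, 0.0
```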

15. Regularization

Regularization is a technique used to reduce overfitting by adding a penalty term to the loss function that discourages overly complex models, such as those with very large weights.

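- Example: L1 regularization penalizes the sum of the absolute values of the weights and can push some weights to exactly zero, while L2 regularization penalizes the sum of squared weights and shrinks them toward zero.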

- Why It Matters: Regularization helps build models that generalize well to unseen data.
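The sketch below writes out the L1 and L2 penalty terms and shows L2 shrinkage in the simplest setting we could pick: a single weight, no intercept, and the loss Σ(y − w·x)² + λ·w². Setting the derivative to zero gives w = Σxy / (Σx² + λ), so a larger λ pulls the weight toward zero. The data and the choice of λ values are made up.

```python
def l1_penalty(weights, lam):
    """L1 penalty: lam * sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 penalty: lam * sum of squared weights."""
    return lam * sum(w ** 2 for w in weights)


def ridge_weight(xs, ys, lam):
    """Closed-form minimizer of sum((y - w*x)**2) + lam * w**2 for one weight, no intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # y = 2x exactly

for lam in (0.0, 14.0):
    print(lam, ridge_weight(xs, ys, lam))  # 0.0 gives 2.0; 14.0 shrinks it to 1.0

print(l1_penalty([0.5, -2.0], 0.1), l2_penalty([0.5, -2.0], 0.1))
```

In practice λ is itself a hyperparameter, usually tuned on the validation set.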

Tips for Learning Machine Learning Terminology

- Practice with Examples: Apply these terms in real-world scenarios or projects.

- Create a Glossary: Maintain a personal glossary of terms for quick reference.

- Join Communities: Participate in forums or groups like Kaggle, Stack Overflow, or Reddit’s r/MachineLearning.

- Learn Gradually: Focus on understanding terms one at a time instead of trying to memorize them all at once.

Final Thoughts

Mastering machine learning terminology is like learning a new language—it takes time, but once you’re familiar with the key terms, everything starts to make sense. Understanding these concepts will not only boost your confidence but also help you collaborate better with peers, interpret research papers, and build impactful machine learning models.

So, dive in, explore, and let the language of machine learning become second nature to you.