AI models generally perform better with more labeled data, but collecting and annotating data is often resource-intensive. Zero-shot and few-shot learning address this challenge by allowing a model to handle new classes or tasks with little to no labeled data. This is why I wanted to share what I have learned about zero- and few-shot learning and how they are changing AI as we know it.

What is Zero-Shot Learning (ZSL)?

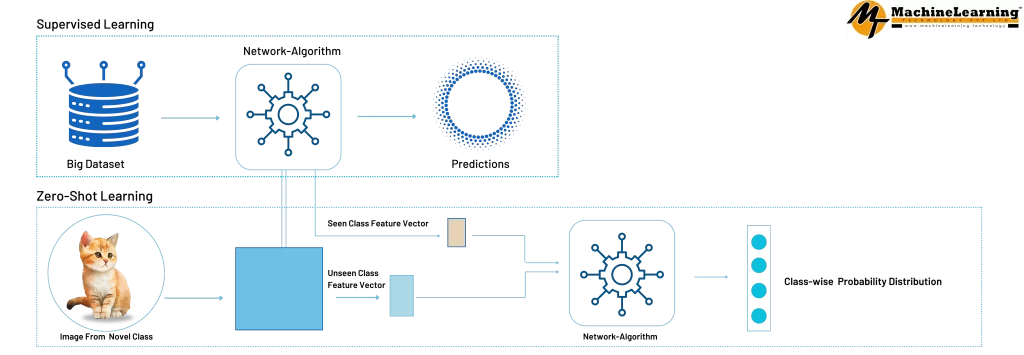

Zero-shot learning (ZSL) allows a model to predict classes it has never encountered during training. Instead of relying on labeled examples of those classes, ZSL generalizes to new categories by drawing on auxiliary semantic knowledge, such as word embeddings, textual descriptions, or class attributes.

How Does Zero-Shot Learning Work?

The concepts within a Zero-Shot Learning paradigm are grouped into the following categories:

- Seen Classes: Classes with labeled examples that were used to train the deep learning model.

- Unseen Classes: Classes that were not part of the training data and to which the trained model has to generalize.

- Auxiliary Information: Because no labeled instances are available for the unseen classes, some auxiliary information must be provided to make zero-shot learning possible. This information should describe all unseen classes, for example through descriptions, semantic attributes, or word embeddings.
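To make the three ingredients concrete, here is a minimal sketch of how auxiliary information can stand in for labeled examples. It assumes a hypothetical attribute predictor, trained on seen classes only, that outputs one score per attribute for an image. The class names, attribute values, and the function `zero_shot_predict` are all made up for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Auxiliary information: one attribute vector per class (hypothetical values).
# Attribute order: [striped, four_legged, has_wings, has_hooves]
CLASS_ATTRIBUTES = {
    "horse": [0.0, 1.0, 0.0, 1.0],  # seen class
    "tiger": [1.0, 1.0, 0.0, 0.0],  # seen class
    "eagle": [0.0, 0.0, 1.0, 0.0],  # seen class
    "zebra": [1.0, 1.0, 0.0, 1.0],  # unseen class: no training images at all
}

def zero_shot_predict(predicted_attributes, class_attributes):
    """Pick the class whose attribute vector best matches the predicted attributes."""
    return max(
        class_attributes,
        key=lambda name: cosine(predicted_attributes, class_attributes[name]),
    )

# Assumed output of an attribute predictor for a photo of a zebra.
image_attributes = [0.9, 0.8, 0.1, 0.7]
print(zero_shot_predict(image_attributes, CLASS_ATTRIBUTES))  # zebra
```

The model never saw a zebra image. The prediction is possible only because the attribute description of "zebra" was supplied as auxiliary information.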

How to choose a Zero-Shot Learning method?

In practice, selecting a Zero-Shot Learning (ZSL) method will depend on the purpose of the task, the data available, and the resources on hand. Here’s a short guide to help you choose an appropriate ZSL method:

Refining the Problem:

- Standard ZSL: A case where the test samples only consist of unseen classes.

- Generalized ZSL (GZSL): A case where the test samples consist of both seen and unseen classes, making it more challenging.
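The difference between the two settings comes down to which labels the model may choose from at test time. The sketch below uses made-up compatibility scores for a single test image to show this. The `harmonic_mean` helper is not part of the guide above; it is the harmonic mean of seen-class and unseen-class accuracy, a metric commonly reported for GZSL.

```python
def predict(scores, candidate_classes):
    """Return the highest-scoring class among the allowed candidates."""
    return max(candidate_classes, key=lambda c: scores[c])

def harmonic_mean(acc_seen, acc_unseen):
    """Harmonic mean of seen- and unseen-class accuracy."""
    total = acc_seen + acc_unseen
    return 2 * acc_seen * acc_unseen / total if total else 0.0

SEEN = ["horse", "tiger"]
UNSEEN = ["zebra", "okapi"]

# Hypothetical compatibility scores for one test image that shows a zebra.
scores = {"horse": 0.55, "tiger": 0.30, "zebra": 0.50, "okapi": 0.20}

standard_prediction = predict(scores, UNSEEN)            # unseen classes only
generalized_prediction = predict(scores, SEEN + UNSEEN)  # every class

print(standard_prediction)     # zebra
print(generalized_prediction)  # horse
```

In this toy case the seen class "horse" scores slightly higher than the correct unseen class, so the generalized setting gets the image wrong while the standard setting gets it right. This pull toward seen classes is one reason GZSL is the harder problem.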

Getting Acquainted with Your Data:

- Semantic Attributes: Descriptors that characterize a class, such as “striped,” “four-legged,” etc.

- Textual Descriptions: Free text that describes the class.

- Word Embeddings: Vector representations of class names or descriptions, generated by models like Word2Vec, GloVe, or BERT.

Scenario-based Method Selection:

- Attribute-based ZSL: Appropriate when attributes that describe the class are available.

Examples: DAP (Direct Attribute Prediction), ESZSL.

- Embedding-based ZSL: Appropriate when class names or textual descriptions can be mapped into a shared embedding space with the input data.

Examples: DeViSE, ALE, SAE.

- Generative-based ZSL (GAN/VAE): Used when there is a need to synthesize instances of unseen classes.

Examples: f-CLSWGAN, CVAE-ZSL.
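As an illustration of the attribute-based family, here is a simplified sketch in the spirit of DAP. It assumes per-attribute probabilities from some attribute classifier (not shown) and binary attribute signatures for the unseen classes. It multiplies the per-attribute match probabilities and, unlike the full method, leaves out the attribute priors. All names and numbers are hypothetical.

```python
import math

def dap_scores(attr_probs, class_signatures, eps=1e-9):
    """Log-probability that the predicted attributes match each class signature.

    attr_probs[m] is the predicted probability that attribute m is present.
    class_signatures[name][m] is 1 if the class has attribute m, else 0.
    """
    scores = {}
    for name, signature in class_signatures.items():
        log_p = 0.0
        for p, a in zip(attr_probs, signature):
            p_match = p if a == 1 else 1.0 - p
            log_p += math.log(max(p_match, eps))  # eps guards against log(0)
        scores[name] = log_p
    return scores

# Attribute order: [striped, four_legged, has_wings]
UNSEEN_SIGNATURES = {
    "zebra": [1, 1, 0],
    "penguin": [0, 0, 1],
}

# Assumed attribute classifier output for one test image.
attr_probs = [0.9, 0.8, 0.1]

scores = dap_scores(attr_probs, UNSEEN_SIGNATURES)
best = max(scores, key=scores.get)
print(best)  # zebra
```

Because each score is built from named attributes, it is easy to inspect which attributes drove a prediction.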

Evaluate Method Complexity:

- Usually, the embedding-based methods are more straightforward and faster to implement.

- Generative methods (GAN/VAE) typically perform better but are more computationally expensive.

Consider Domain Specificity:

- Generative models tend to perform well on visual tasks.

- For NLP tasks, embedding-based approaches are usually a strong choice.

Performance & Interpretability:

- Attribute-based methods are more interpretable, because predictions can be traced back to human-readable attributes.

- Generative methods, such as those based on GANs and VAEs, often have the best accuracy and the lowest level of interpretability.

Quick Decision Guide:

| Scenario / Data Available | Recommended Method |
| --- | --- |
| Clear Attributes | Attribute-based methods like DAP or ESZSL are advisable. |
| Textual Data (descriptions) | Use text embedding based methods like DeViSE or GloVe/BERT embeddings. |
| Need High Accuracy | Generative methods like GANs or VAEs are needed. |
| Limited Resources or Fast Deployment | Use embedding methods. |

What is Few-Shot Learning (FSL)?

Few-shot learning (FSL) is a type of machine learning in which a model learns new tasks from only a few labeled examples. FSL doesn’t require the same volume of data as traditional machine learning approaches because it tries to generalize from far fewer examples.

How Does Few-Shot Learning Work?

In few-shot learning, the model learns to manage new tasks or concepts using only a small number of examples for each class (often between 1 and a few dozen samples). This approach is practical when collecting large amounts of labeled data is costly or impractical.

Here’s how few-shot learning generally works:

Meta-Learning (also known as Learning to Learn)

Meta-learning is at the heart of few-shot learning and aims to teach the model how to learn new tasks by drawing on previously learned tasks. At a high level, it consists of the following phases:

Training phase:

- The model is trained on many tasks, each with a few examples.

- The model tries to achieve a high-level generalization of how to adapt to new tasks by finding patterns across dissimilar tasks.

Testing phase:

- The model encounters an entirely novel task and, using its generalized meta-knowledge, attempts to solve it with only a few labeled examples.
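Training on "many tasks, each with a few examples" is usually organized into episodes. The sketch below shows one way to sample an N-way K-shot episode from a labeled dataset. The function name `sample_episode`, its parameters, and the toy dataset are assumptions made for illustration, not a fixed API.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, rng):
    """Sample one few-shot task from a dict mapping label -> list of examples.

    Returns (support, query), each a list of (example, label) pairs.
    """
    classes = rng.sample(sorted(dataset), n_way)  # pick N classes for this task
    support, query = [], []
    for label in classes:
        examples = rng.sample(dataset[label], k_shot + n_query)
        support += [(x, label) for x in examples[:k_shot]]  # K labeled examples
        query += [(x, label) for x in examples[k_shot:]]    # held out for evaluation
    return support, query

# Toy dataset: 5 classes with 10 placeholder "images" each.
dataset = {
    f"class_{c}": [f"class_{c}_img_{i}" for i in range(10)] for c in range(5)
}

rng = random.Random(0)
support, query = sample_episode(dataset, n_way=3, k_shot=2, n_query=4, rng=rng)
print(len(support), len(query))  # 6 12
```

During the training phase, each episode draws a different set of classes, so the model is rewarded for adapting quickly rather than for memorizing any particular class.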

Common Methods in Few-Shot Learning

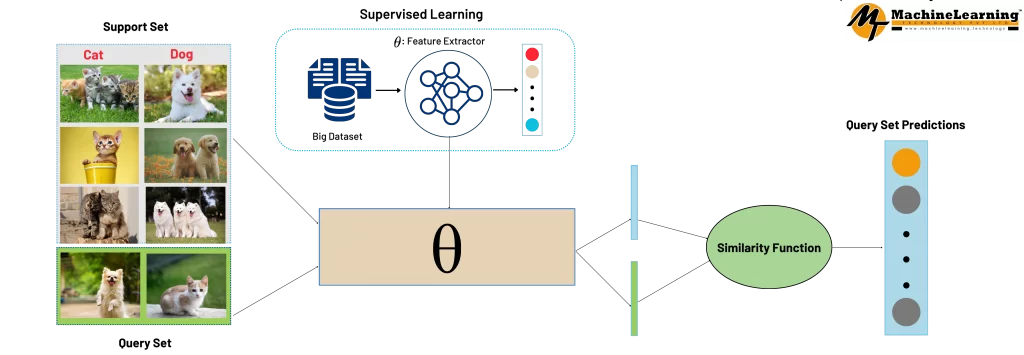

Metric-based methods (e.g., Prototypical Networks, Siamese Networks):

- These methods learn a similarity metric between samples, typically in an embedding space, and rely on that metric for classification.

- When a new task arrives, the model solves it by classifying new samples based on their similarity to the few labeled examples (support set).
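The classification step of a Prototypical Network can be sketched in a few lines: average the support embeddings of each class into a prototype, then assign each query to the nearest prototype. In the real method the embeddings come from a trained neural encoder. Here they are hand-written 2-D vectors, and the species names are placeholders.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def squared_distance(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def build_prototypes(support):
    """Average the support embeddings of each class into one prototype."""
    by_label = {}
    for embedding, label in support:
        by_label.setdefault(label, []).append(embedding)
    return {label: mean_vector(embs) for label, embs in by_label.items()}

def classify(query_embedding, prototypes):
    """Assign the query to the class with the nearest prototype."""
    return min(
        prototypes,
        key=lambda label: squared_distance(query_embedding, prototypes[label]),
    )

# Support set: three labeled embeddings per class (assumed encoder outputs).
support = [
    ([1.0, 0.9], "robin"), ([0.8, 1.1], "robin"), ([1.2, 1.0], "robin"),
    ([3.0, 0.2], "kingfisher"), ([3.2, 0.0], "kingfisher"), ([2.8, 0.1], "kingfisher"),
]

prototypes = build_prototypes(support)
print(classify([1.1, 0.8], prototypes))  # robin
print(classify([2.9, 0.3], prototypes))  # kingfisher
```

No weights are updated when the new classes arrive. All of the adaptation happens through the prototypes computed from the support set.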

Optimization-based methods (e.g., Model-Agnostic Meta-Learning—MAML):

- The model is trained with the explicit goal of rapidly adapting to new scenarios.

- It learns an initial set of parameters that can be fine-tuned with a few gradient steps to accomplish new tasks.
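The idea of learning a good starting point can be shown on a toy problem. The sketch below uses a first-order approximation of MAML, not the full algorithm, on a one-parameter model `y_hat = w * x`. Each task is a line with a random slope between 2 and 4. The task distribution, learning rates, and function names are all assumptions chosen to keep the example small.

```python
import random

def mse(w, data):
    """Mean squared error of the model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def mse_grad(w, data):
    """Gradient of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

def sample_task(rng, n_points=5):
    """A task is a line y = a * x with a random slope a (toy assumption)."""
    a = rng.uniform(2.0, 4.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(2 * n_points)]
    points = [(x, a * x) for x in xs]
    return points[:n_points], points[n_points:]  # support set, query set

def adapt(w, support, inner_lr=0.1, steps=1):
    """Inner loop: a few gradient steps on the task's support set."""
    for _ in range(steps):
        w = w - inner_lr * mse_grad(w, support)
    return w

def meta_train(rng, meta_steps=2000, inner_lr=0.1, outer_lr=0.01):
    """Outer loop: move the initialization so that adapted weights do well."""
    w = 0.0
    for _ in range(meta_steps):
        support, query = sample_task(rng)
        w_adapted = adapt(w, support, inner_lr)
        # First-order approximation: use the query gradient at the adapted
        # weights and ignore how w_adapted itself depends on w.
        w -= outer_lr * mse_grad(w_adapted, query)
    return w

rng = random.Random(0)
w_meta = meta_train(rng)

# On a new task, one gradient step from w_meta beats one step from scratch.
support, query = sample_task(rng)
loss_meta = mse(adapt(w_meta, support), query)
loss_scratch = mse(adapt(0.0, support), query)
print(loss_meta < loss_scratch)  # True
```

Full MAML also differentiates through the inner gradient step, which requires second-order gradients. The first-order variant shown here drops that term to keep the code short.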

Parameter-generation methods:

- A separate neural network analyzes the few available examples and generates the parameters or weights of the main network so that it performs well on the new task.

Typical Workflow

A typical few-shot learning workflow is built around two sets of data:

- Support Set: A small set of labeled examples for learning a new task.

- Query Set: A set of unlabeled examples the model must classify after learning from the support set.

Let’s take an example: You want to categorize certain uncommon species of birds. You only have three labeled images of each new bird species, which you provide as the support set. The model then classifies many other photos of these species that it has never seen before (the query set), because it adapts its internal representation of these species using those few examples.

Key Differences Between Zero-Shot and Few-Shot Learning

| Aspect | Zero-Shot Learning (ZSL) | Few-Shot Learning (FSL) |
| --- | --- | --- |
| Labeled Data | No labeled examples for new tasks/classes | Very limited labeled examples for new tasks/classes |
| Approach | Uses semantic attributes or knowledge transfer | Employs meta-learning or similarity-based techniques |
| Human Analogy | Understanding concepts via descriptions or knowledge without examples | Learning quickly from just a few examples |
| Applicability | Completely unseen scenarios | Rare or scarce data scenarios |
| Example Scenario | Identifying an animal species never seen before based on attributes like “four legs” or “striped” | Recognizing a flower species after seeing only a couple of examples |

Why Are Zero-Shot and Few-Shot Learning Important?

- Reduced Dependency on Data: Removing the need for large annotated datasets makes AI development easier to scale.

- Time and Cost Efficiency: Less data labeling means lower costs and faster model deployment.

- Supports Open World Learning: Models can identify and classify categories they were never explicitly trained on.

- Improves the Flexibility of AI: AI systems can adapt more readily to fast-changing environments.

Applications of Zero-Shot and Few-Shot Learning

| Application | Zero-Shot Learning | Few-Shot Learning |
| --- | --- | --- |
| Natural Language Processing (NLP) | Large language models such as GPT can perform tasks like translation without being explicitly trained for them. | Text classification models can be fine-tuned for tasks such as sentiment analysis, even with scarce labeled data. |
| Computer Vision | Auxiliary attribute information helps image recognition systems detect unknown objects. | AI for medical imaging can identify infrequently documented disorders with minimal patient data. |
| Chatbots and Virtual Assistants | AI assistants can discuss unfamiliar topics without any prior instruction. | Automated responders adjust their replies based on a handful of example conversations. |
| Autonomous Systems | Contextual learning enables self-driving cars to understand novel road signs. | Industrial robots can be taught new tasks from a small number of demonstrations. |

Challenges in Zero-Shot and Few-Shot Learning

- Inaccuracy from Lack of Training Examples: Generalizing from no examples, or only a handful, can produce inaccurate predictions.

- Semantic Gap: Finding auxiliary information that reliably links known and unknown categories is still difficult.

- Overfitting: With so few examples to learn from, few-shot models are prone to overfitting, which lowers accuracy and reliability.

- Implementation Complexity: Meta-learning and embedding techniques require advanced understanding and are challenging to implement.

Best Practices for Implementing ZSL and FSL

Use Pre-Trained Models

- Use pre-trained models such as CLIP by OpenAI or T5 from Google to carry existing knowledge over to new tasks.

- Use transfer learning to refine the model with little additional effort.

Leverage External Knowledge Sources

- Employ semantic embeddings from NLP models such as Word2Vec, BERT, and GPT.

- Draw on structured references like Wikipedia and ConceptNet.

Optimize with Meta-Learning

- Employ few-shot learning strategies such as Prototypical Networks or MAML.

- Apply episodic training to enhance learning capabilities.

Enhance Model Interpretability

- Use explainable AI (XAI) techniques to examine how the model arrives at its zero-shot predictions.

- Visualize decision boundaries to find and correct sources of error.

Future of Zero-Shot Learning

- Broader Generalization: Models will be able to combine the semantics of category names with knowledge graphs to interpret entirely unseen categories.

- Enhanced Semantic Understanding: Understanding new concepts without providing examples will be possible due to the integration of advanced linguistic and semantic embeddings into AI.

- Cross-Domain Adaptability: Models will be able to transfer what they learn in one domain or industry to another, even when the two are entirely different.

- Knowledge Graph Integration: Improving how well AI models comprehend new concepts will require background knowledge that relies more heavily on structured knowledge and ontologies.

- Scalability in Real-world Applications: Regular encounters with new situations make this technology particularly useful in areas such as virtual assistants, self-driving cars, and automated healthcare diagnostics.

- Enhancing Explainability: Reason-based explanations will improve user trust and adoption, especially in critical areas like healthcare and finance, where AI systems need to explain the reasoning behind their decisions.

Future of Few-Shot Learning

- Advanced Meta-Learning Techniques: More sophisticated meta-learning architectures will enable AI models to shift between tasks with little or no new data collection, drastically reducing the effort required to adapt a model.

- Continual and Lifelong Learning: The model will support lifelong learning through incremental learning from small new data sets, facilitating models that evolve dynamically over time.

- Integration with Large Pretrained Models: Foundation models, such as language and vision transformers, will be adapted so that a few provided examples are enough to instruct them to carry out a task efficiently.

- Automation in Labeling and Annotation: Automatic and semi-automatic labeling techniques will reduce manual annotation effort, improving efficiency and lowering costs for few-shot learning.

- Enhanced Transferability Across Tasks: The models will be more proficient in using knowledge gained in performing one few-shot learning task to complete another, even across dissimilar modalities (text, vision, audio).

- Industry-Specific Customization: Few-shot learning will see increased and more specialized use in sectors like healthcare, finance, and robotics, where data collection is difficult or sensitive and models must perform well with very limited data.

Conclusion

Zero-shot and few-shot learning have changed how AI systems are built, enabling models to learn with little or no labeled data. These approaches bring efficiency, flexibility, and scalability to AI development across different industries.

As artificial intelligence advances, businesses and researchers should look for ways to use these two learning methods to improve their machine learning models and bring smarter AI systems within reach.

Are you ready to implement zero-shot and few-shot learning in your AI projects? Start using pre-trained models and meta-learning today!